Scientific publication

T. M. Lange, M. Gültas, A. O. Schmitt & F. Heinrich (2025). optRF: Optimising random forest stability by determining the optimal number of trees. BMC Bioinformatics, 26(1), 95. Follow this LINK to the original publication.

Random Forest — A Powerful Tool for Anyone Working With Data

What is Random Forest?

Have you ever wished you…

Survival Analysis is a statistical approach used to answer the question: “How long will something last?” That “something” could range from a patient’s lifespan to the durability of a machine component or the duration of a user’s subscription.

One of the most widely used tools in this area is the Kaplan-Meier estimator.

Born in the…

AutoML has become the gateway drug to machine learning for many organizations. It promises exactly what teams under pressure want to hear: you bring the data, and we’ll handle the modeling. There are no pipelines to manage, no hyperparameters to tune, and no need to learn scikit-learn or TensorFlow; just click, drag, and deploy.

At…

Why you should read this

As someone who did a Bachelor’s in Mathematics, I was first introduced to L¹ and L² as measures of distance; now they seem to be measures of error. Where have we gone wrong? But jokes aside, there seems to be this misconception that L₁ and L₂ serve the same function, and…

Browsing GitHub the other day, I came across a library I’d never heard of before. It was called NumExpr.

I was immediately interested because of some claims made about the library. In particular, it stated that for some complex numerical calculations, it was up to 15 times faster than NumPy.

I was intrigued because, up…

Cybersecurity leaders are being asked impossible questions. “What’s the likelihood of a breach this year?” “How much would it cost?” And “how much should we spend to stop it?”

Yet most risk models used today are still built on guesswork, gut instinct, and colorful heatmaps, not data.

In fact, PwC’s 2025 Global Digital Trust…

Deploying your Large Language Model (LLM) is not necessarily the final step in productionizing your Generative AI application. An often forgotten, yet crucial, part of the MLOps lifecycle is properly load testing your LLM and ensuring it is ready to withstand your expected production traffic. Load testing at a high level is the practice of…

Recently, Sesame AI published a demo of their latest Speech-to-Speech model: a conversational AI agent that is really good at speaking. It provides relevant answers, speaks with expression, and honestly, is just very fun and interactive to play with.

Note that a technical paper is not out yet, but they do have a…

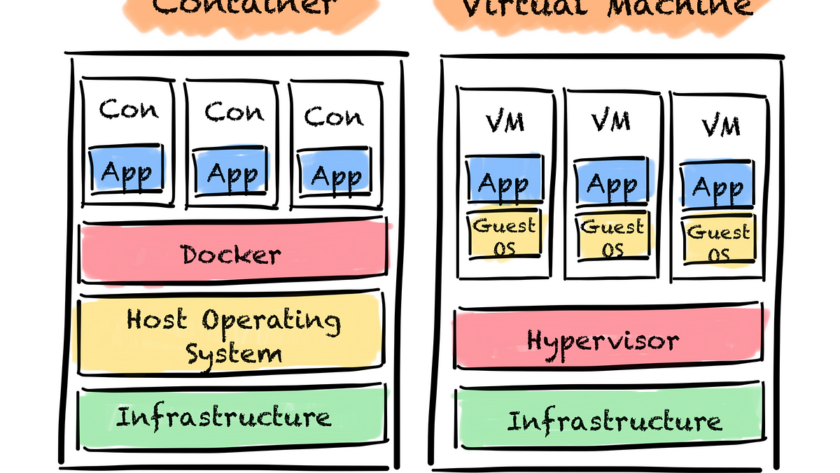

For an ML model to be useful, it needs to run somewhere. This somewhere is most likely not your local machine. A not-so-good model that runs in a production environment is better than a perfect model that never leaves your local machine.

However, the production machine is usually different from the one you developed the…

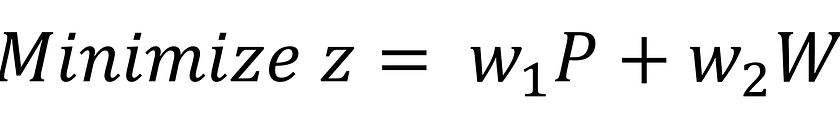

This is the sixth (and likely last) part of a Linear Programming series I’ve been writing. With the core concepts covered by the prior articles, this article focuses on goal programming, which is a less frequent linear programming (LP) use case. Goal programming is a specific linear programming setup that can handle the optimization of…