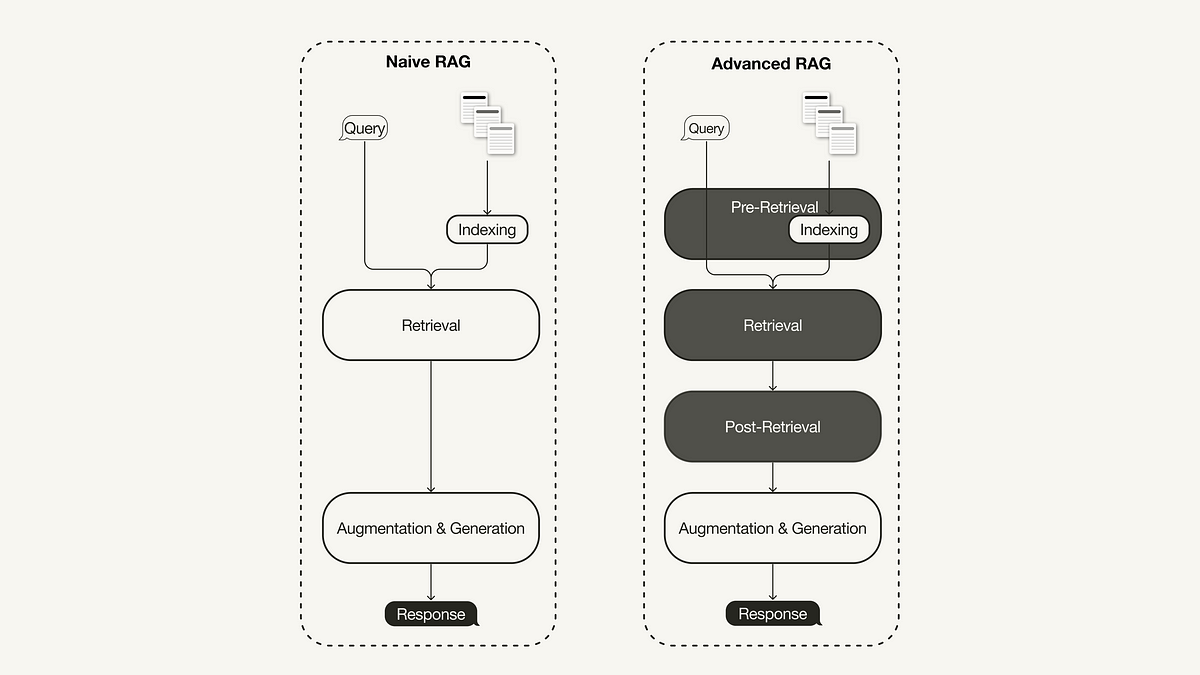

*Orchestra, photo by Arindam Mahanta on Unsplash*

Optimising multi-model collaboration with graph-based orchestration

Integrating the capabilities of various AI models unlocks a symphony of potential. It ranges from automating complex tasks that need several abilities, such as vision, speech, writing, and synthesis, to enhancing decision-making processes. Yet orchestrating these collaborations presents a significant challenge in managing the inner relations…
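The author's own orchestration method does not appear here. The Python below is only a rough, hypothetical sketch of one common reading of "graph-based orchestration." Each model is a node in a directed acyclic graph, and each edge is a data dependency. The nodes run in topological order using the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The four "models" (`vision`, `speech`, `writer`, `synthesis`) are placeholder functions that return canned strings. The node names, the `GRAPH`/`MODELS` tables and the `run` helper are all illustrative assumptions, not real model calls or any particular framework's API.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Set

# Hypothetical stand-ins for real AI models: each receives the outputs of its
# upstream nodes (a dict keyed by node name) and returns a string.
def vision(inputs: Dict[str, str]) -> str:
    return "caption: a conductor leading an orchestra"

def speech(inputs: Dict[str, str]) -> str:
    return "transcript: welcome to tonight's concert"

def writer(inputs: Dict[str, str]) -> str:
    return f"summary of [{inputs['vision']}] and [{inputs['speech']}]"

def synthesis(inputs: Dict[str, str]) -> str:
    return f"audio narration of ({inputs['writer']})"

# Each node maps to the set of nodes whose outputs it depends on.
GRAPH: Dict[str, Set[str]] = {
    "vision": set(),
    "speech": set(),
    "writer": {"vision", "speech"},
    "synthesis": {"writer"},
}

MODELS: Dict[str, Callable[[Dict[str, str]], str]] = {
    "vision": vision,
    "speech": speech,
    "writer": writer,
    "synthesis": synthesis,
}

def run(
    graph: Dict[str, Set[str]],
    models: Dict[str, Callable[[Dict[str, str]], str]],
) -> Dict[str, str]:
    """Run every model once, only after all of its dependencies have finished."""
    outputs: Dict[str, str] = {}
    # static_order() yields predecessors before their dependents and raises
    # graphlib.CycleError if the dependencies form a cycle.
    for node in TopologicalSorter(graph).static_order():
        upstream = {dep: outputs[dep] for dep in graph[node]}
        outputs[node] = models[node](upstream)
    return outputs

if __name__ == "__main__":
    for name, value in run(GRAPH, MODELS).items():
        print(f"{name}: {value}")
```

Encoding the relations between models as an explicit graph gives the orchestrator a guaranteed execution order. It also rejects circular dependencies up front. Independent nodes such as `vision` and `speech` are the natural candidates to run in parallel in a fuller implementation.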