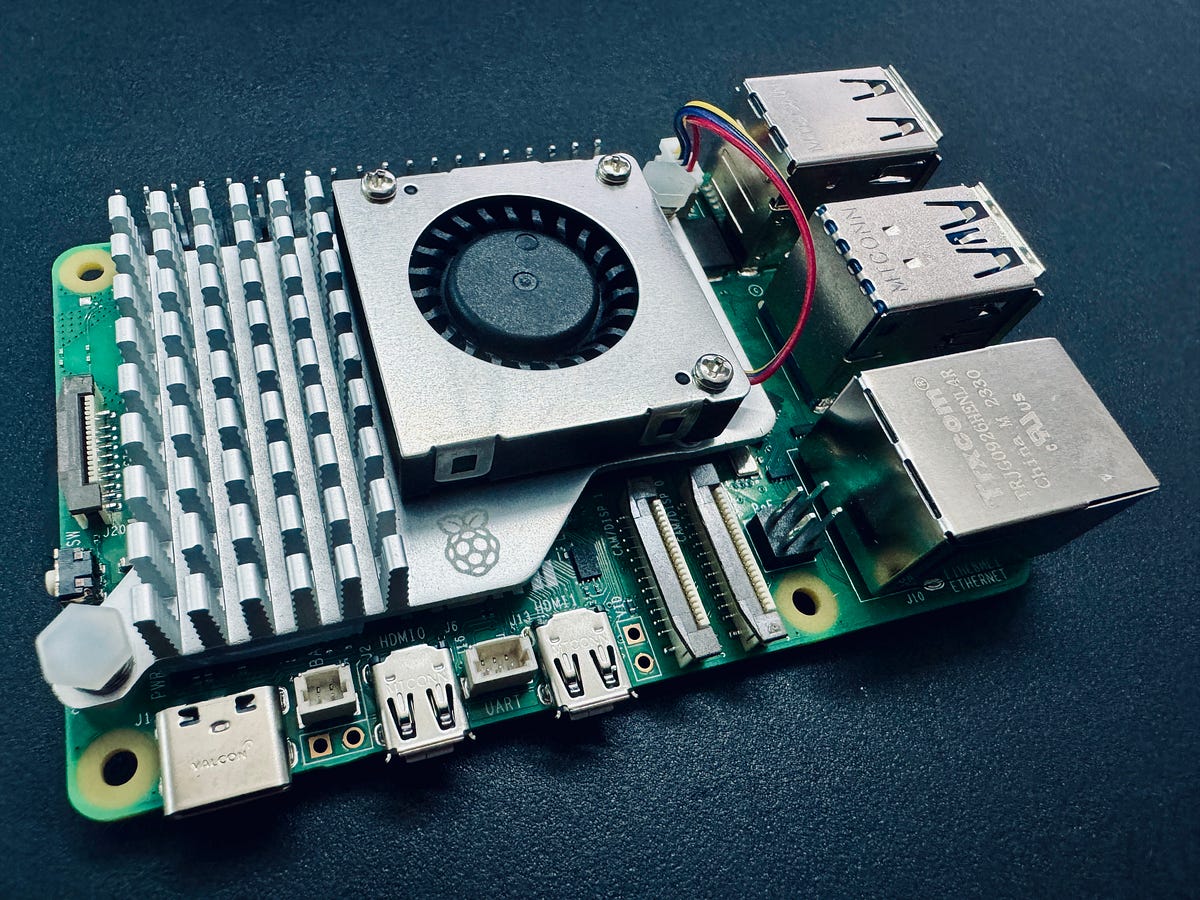

Host LLMs and VLMs using Ollama on the Raspberry Pi (Source: Author)

Get models like Phi-2, Mistral, and LLaVA running locally on a Raspberry Pi with Ollama

Ever thought of running your own large language models (LLMs) or vision language models (VLMs) on your own device? You probably did, but the thought of setting things…