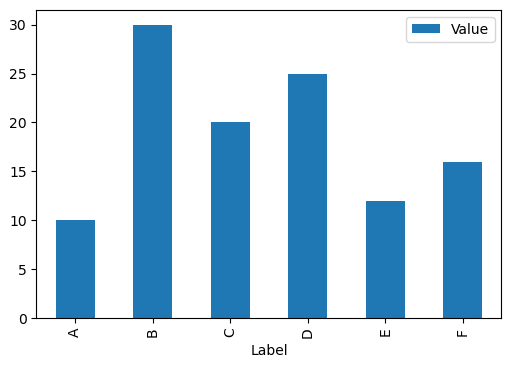

Data Science Fundamentals

Beginner’s practical guide to discrete optimisation in Python

10 min read · 16 hours ago

Data Scientists tackle a wide range of real-life problems using data and various techniques. Mathematical optimisation, a powerful technique that can be applied to a wide range of problems in many domains, makes a…